AGI and the Ever Moving Goalposts

For years, Artificial General Intelligence (AGI) has remained an elusive milestone. Despite the progress made with Large Language Models (LLMs), opinions are divided: some believe that AGI has finally arrived, while others strongly disagree.

Published on November 28, 2023

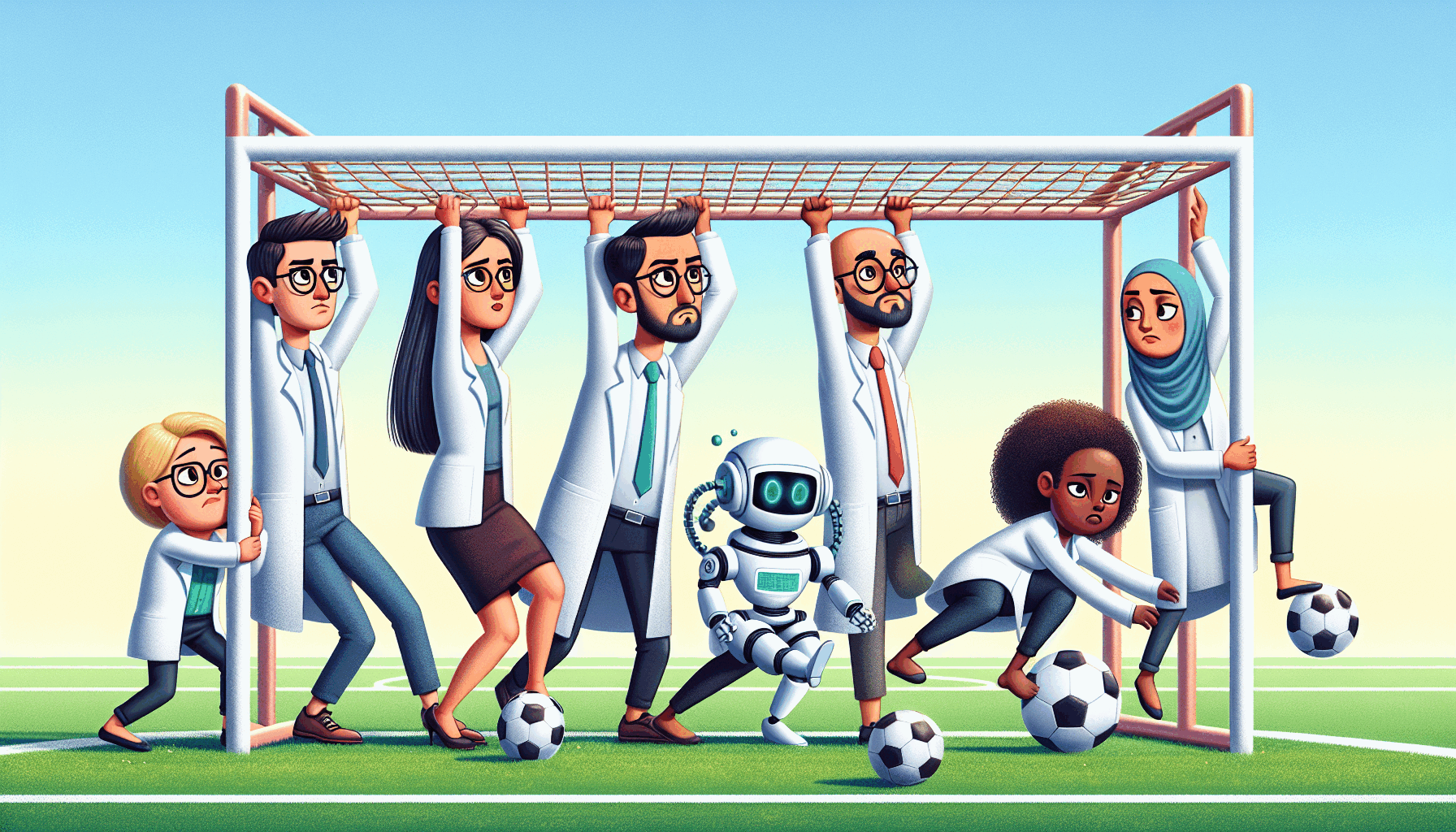

Scientists moving the goalposts.

The question of whether AI technology has achieved Artificial General Intelligence (AGI), widely considered the pinnacle of AI research, is a significant one. Reaching this milestone would be a transformative leap, with the potential to profoundly disrupt many aspects of everyday life.

The remarkable capabilities of Large Language Models (LLMs), especially those like ChatGPT, have led many people to speculate that AI has reached or exceeded the intelligence of at least some portion of the human population. Such claims, however, are frequently rebutted by scientists and researchers, who maintain that true AGI has yet to be achieved.

Debates about whether AGI has been attained are rife with unresolved questions, not least of which is the definition of intelligence itself. Despite centuries of study, there is no consensus on a universal formulation of intelligence. Nevertheless, there is broad agreement that intelligence can be recognized when it is observed.

Scientists have proposed numerous tests for artificial intelligence, the Turing test being one of the best known. However, this test has faced significant criticism, such as the argument presented by John Searle's Chinese Room thought experiment. While extensive discussions on this topic take place within the scientific community, only the most high-profile debates tend to reach the general public. As a result, people who do not closely follow the latest discourse may be under the impression that definitive tests for artificial intelligence exist.

In debates about whether current AI technology has achieved general intelligence, widely recognized tests are often cited as benchmarks, and it can be argued that today's AI technology has indeed passed them. Nevertheless, scientists generally do not accept that this marks the arrival of general intelligence, which frustrates many people, particularly proponents of technological progress.

From the viewpoint of those advocating for technological advancement, it seems as if every time they ask whether AI has attained AGI status, a new test is introduced. And whenever AI achieves a breakthrough that allows it to pass these tests, yet another set of criteria emerges. To these advocates, it appears that scientists keep moving the goalposts, reluctant to concede that the latest technology qualifies as AGI.

What these advocates may not fully grasp is that the concept of intelligence remains poorly defined, and many of its facets are not yet well understood. A new test typically targets what scientists see as a crucial shortcoming that keeps current AI technology from achieving general intelligence. As AI passes each test, we often find that the targeted weakness, while significant, was not the defining condition for intelligence: overcoming it does not, on its own, amount to general intelligence. The evolving nature of these tests therefore reflects an ongoing refinement of our understanding of what constitutes true intelligence.

Compounding the issue, scientific communication has faced significant challenges, particularly since the COVID-19 pandemic, and public trust in authorities may now be at historic lows. When groundbreaking, potentially disruptive technologies emerge, many people want to be informed and prepared. This desire for preparedness widens the gap between the scientific community and the general public, especially when people feel that the discussion of such complex matters is not being adequately conveyed to non-experts.

Yet this back-and-forth is a fundamental part of progress on any genuinely complex problem. In programming, determining the specifications and requirements is often the hardest part; once they are established, the actual coding tends to be relatively straightforward. Similarly, with AGI, the key difficulty may lie in precisely defining what constitutes general intelligence. Once we understand that, implementing AGI might, in fact, be the simpler step.

As long as there is no consensus on the definition of general intelligence, AGI remains an elusive goal. Measuring progress toward AGI is particularly challenging; unlike technological advancements, which can often be gauged by extrapolating from current trends, scientific breakthroughs tend to occur in unpredictable spurts and depend on the readiness of all the necessary components. Consequently, any predictions about the timeline for achieving AGI should be approached with caution; estimates could range from a decade to a century or more. What is certain, though, is that current AI technology serves as a powerful force multiplier, a role it is likely to maintain in the years ahead.

Have comments, or want to discuss the contents of this post? You can always contact me by email.